Architectural Requirements and Design Document

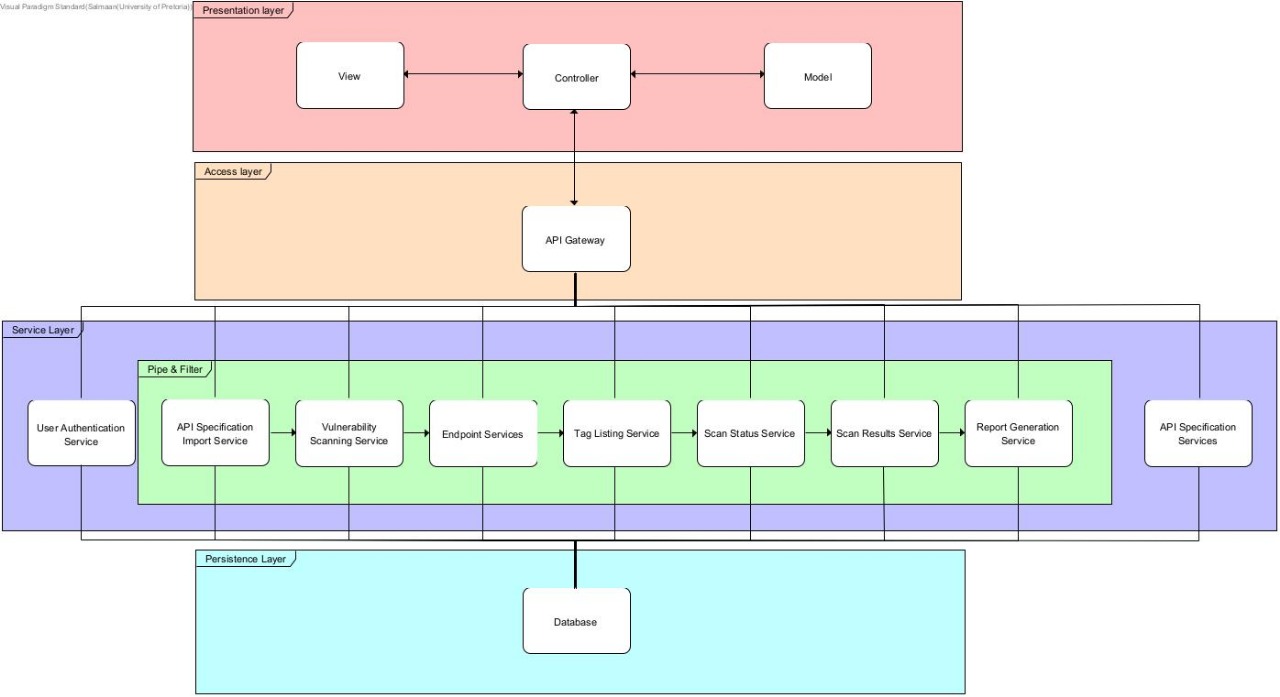

4.3.1 Architectural Structural Design & Requirements

Architectural Overview

See the Architecture document for further detail.

Layers & Responsibilities

- Presentation (React) — Guides the flows: import spec → discover endpoints → create/start scan → view results.

- Access Layer — API Gateway (Node/Express) — Single REST surface, input validation, JWT auth, basic rate limiting, and brokering to the engine.

- Service Layer (Python Engine, microkernel) — Long-running TCP server that executes JSON commands; hosts modular OWASP API Top‑10 checks and report generation.

- Persistence Layer (Supabase/Postgres) — Users, APIs, endpoints, scans, scan results, tags, flags.

Interfaces & Contracts

- UI → API (REST over HTTPS): JSON requests/responses, JWT in `Authorization: Bearer <token>`, idempotent GET, pagination/limits on list endpoints.

- API ↔ Engine (TCP JSON): one request/response message `{ "command": "<name>", "data": { ... } }` on TCP 127.0.0.1:9011; bounded sizes; timeouts; server closes after reply.

- API/Engine → DB (Supabase HTTPS): parameterised queries, role‑restricted service key, minimal over‑fetch, index‑aware queries, pagination.
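The API ↔ Engine contract above can be illustrated with a minimal sketch. It assumes the request ends when the client half-closes the socket, since the document does not specify framing. The 64 KiB bound, the 5 s timeout, and the `{"ok": ...}` reply shape are illustrative assumptions, not the engine's actual protocol.

```python
import json
import socket
import socketserver
import threading

MAX_MESSAGE_BYTES = 64 * 1024  # assumed bound; the document only says "bounded sizes"
TIMEOUT_SECONDS = 5.0          # assumed value; the document only says "timeouts"


def read_until_closed(sock: socket.socket, limit: int = MAX_MESSAGE_BYTES) -> bytes:
    """Read until the peer stops sending, rejecting oversized messages."""
    chunks, total = [], 0
    while True:
        chunk = sock.recv(4096)
        if not chunk:
            break
        total += len(chunk)
        if total > limit:
            raise ValueError("message exceeds size bound")
        chunks.append(chunk)
    return b"".join(chunks)


class EngineHandler(socketserver.BaseRequestHandler):
    """Toy engine: one JSON command in, one JSON reply out, then close."""

    def handle(self) -> None:
        self.request.settimeout(TIMEOUT_SECONDS)
        try:
            message = json.loads(read_until_closed(self.request))
            command, data = message["command"], message.get("data", {})
            if command == "connection.test":
                reply = {"ok": True, "echo": data}  # hypothetical reply shape
            else:
                reply = {"ok": False, "error": f"unknown command: {command}"}
        except (ValueError, KeyError, TypeError, OSError) as exc:
            reply = {"ok": False, "error": str(exc)}
        self.request.sendall(json.dumps(reply).encode("utf-8"))
        # Returning from handle() lets socketserver close the connection.


def send_command(host: str, port: int, command: str, data: dict) -> dict:
    """Client side (the gateway's role): send one command, read one reply."""
    with socket.create_connection((host, port), timeout=TIMEOUT_SECONDS) as sock:
        sock.sendall(json.dumps({"command": command, "data": data}).encode("utf-8"))
        sock.shutdown(socket.SHUT_WR)  # assumed framing: half-close marks end of request
        return json.loads(read_until_closed(sock))


def demo() -> dict:
    # Port 0 picks a free port for the demo; the document's engine uses 127.0.0.1:9011.
    with socketserver.TCPServer(("127.0.0.1", 0), EngineHandler) as server:
        threading.Thread(target=server.serve_forever, daemon=True).start()
        try:
            port = server.server_address[1]
            return send_command("127.0.0.1", port, "connection.test", {"ping": 1})
        finally:
            server.shutdown()
```

Calling `demo()` runs one full round trip against the toy handler. A length-prefixed or newline-delimited framing would work equally well; half-close is used here only because it pairs naturally with "server closes after reply".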

Representative Surfaces

- Express routes (examples): `/api/auth/signup|login|logout|profile|google-login` • `/api/apis` (CRUD) • `/api/import` • `/api/endpoints` • `/api/endpoints/details` • `/api/endpoints/tags/add|remove|replace` • `/api/endpoints/flags/add|remove` • `/api/tags` • `/api/scan/create|start|status|progress|results|list|stop` • `/api/scans/schedule` • `/api/dashboard/overview` • `/api/reports/*`

- Engine commands (examples): `apis.get_all|create|update|delete|import_url` • `endpoints.list|details|tags.add|tags.remove|tags.replace|flags.add|flags.remove` • `scan.create|start|status|progress|results|list|stop` • `scans.schedule.get|create_or_update|delete` • `templates.list|details|use` • `tags.list` • `connection.test` • `user.profile.get|update` • `user.settings.get|update`

Cross‑Cutting Concerns

Validation (schema & file‑type/size checks for imports) • Observability (structured logs, correlation id) • Configuration via .env • Security (JWT, rate limit, least privilege, no secrets in code, CORS restricted).
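As an illustration of the file‑type/size checks for imports, the sketch below validates an uploaded spec before it reaches the engine. The 5 MiB limit, the function name, and the error strings are assumptions made for the example. Only the JSON branch is parsed, because YAML parsing would need a third‑party library.

```python
import json
from pathlib import PurePosixPath

ALLOWED_EXTENSIONS = {".json", ".yaml", ".yml"}  # imports restricted to JSON/YAML
MAX_IMPORT_BYTES = 5 * 1024 * 1024               # assumed limit, not stated in the document


def validate_import(filename: str, payload: bytes) -> list[str]:
    """Return a list of validation errors; an empty list means the file is accepted."""
    errors = []
    suffix = PurePosixPath(filename).suffix.lower()
    if suffix not in ALLOWED_EXTENSIONS:
        errors.append(f"unsupported file type: {suffix or '(none)'}")
    if len(payload) == 0:
        errors.append("file is empty")
    elif len(payload) > MAX_IMPORT_BYTES:
        errors.append(f"file exceeds {MAX_IMPORT_BYTES} bytes")

    # Light schema check for JSON only: OpenAPI 3 documents carry a top-level
    # "openapi" field and Swagger 2.0 documents carry "swagger".
    if not errors and suffix == ".json":
        try:
            spec = json.loads(payload)
        except ValueError:  # covers JSONDecodeError and UnicodeDecodeError
            errors.append("not valid JSON")
        else:
            if not isinstance(spec, dict) or not (spec.keys() & {"openapi", "swagger"}):
                errors.append("missing 'openapi' or 'swagger' version field")
    return errors
```

For example, `validate_import("petstore.json", b'{"openapi": "3.0.0"}')` returns an empty list, while a `.exe` upload is rejected on its extension before any parsing happens.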

4.3.2 Quality Requirements (Quantified)

| Attribute | Scope | Target (Quantified) | Measurement |
|---|---|---|---|
| Latency (reads) | `/api/**` read endpoints | p95 ≤ 1.5 s, p99 ≤ 3.0 s @ 50 VUs; p95 ≤ 2.5 s @ 100 VUs | JMeter aggregate (p95/p99) |
| Throughput | Mixed plan | ≥ 7 req/s sustained for ≥ 3 min @ 100 VUs | JMeter “Throughput” |
| Scan duration | 100 endpoints, “Balanced” profile | ≤ 180 s end‑to‑end (create → start → results) | API + engine timers |
| Reliability | API at nominal load | Error rate < 5% (excl. deliberate 401/403 negative tests) | JMeter “Error %” |
| Security | All mutations | JWT on selected routes; auth rate‑limited; imports restricted to JSON/YAML; secrets via env | Code + tests |
| Maintainability | API + Engine | Unit test coverage ≥ 60% lines; CI runs unit + integration smoke | Jest/Pytest + CI |
| Usability | UI flows | Import spec and start a scan in ≤ 3 steps; clear labels/tooltips on each step | Heuristic eval/demo |
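To make the latency targets concrete, the following sketch checks a set of read‑endpoint samples against the p95/p99 budgets in the table. It uses the nearest‑rank percentile definition, which is an assumption; JMeter's own percentile calculation may differ slightly on small samples. The function names are illustrative.

```python
import math

# Budgets in milliseconds, keyed by virtual users, taken from the latency row above.
READ_BUDGETS_MS = {
    50: {95: 1500, 99: 3000},
    100: {95: 2500},
}


def percentile(samples_ms: list[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% at or below it."""
    if not samples_ms:
        raise ValueError("no samples")
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_read_latency(samples_ms: list[float], virtual_users: int) -> dict[str, bool]:
    """Map each budgeted percentile (e.g. 'p95') to True if it is within budget."""
    budgets = READ_BUDGETS_MS[virtual_users]
    return {
        f"p{pct}": percentile(samples_ms, pct) <= budget_ms
        for pct, budget_ms in budgets.items()
    }


# Twenty illustrative samples from 100 ms to 2000 ms (not measured data).
EXAMPLE_SAMPLES_MS = list(range(100, 2100, 100))
```

With the illustrative samples, `check_read_latency(EXAMPLE_SAMPLES_MS, 50)` reports the p95 budget as missed (1900 ms against 1500 ms) and the p99 budget as met.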

4.3.3 Non‑Functional Testing (Method, Evidence, Reflection)

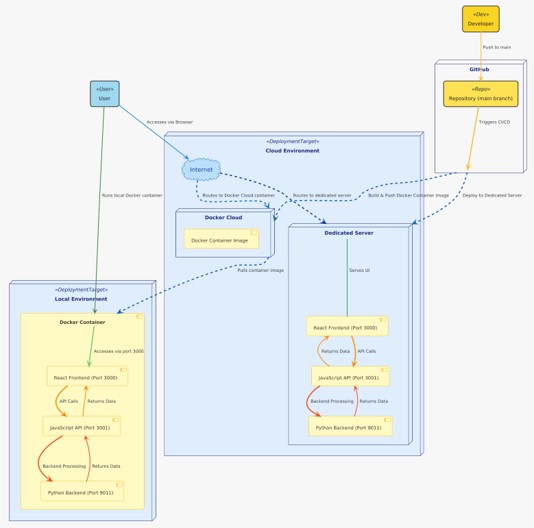

Deployment / Test Environment

See the Deployment document for further detail.

Assumptions: API (Node) and Engine (Python) co‑located; engine bound to 127.0.0.1:9011; Supabase remote; default socket & HTTP timeouts unless stated.

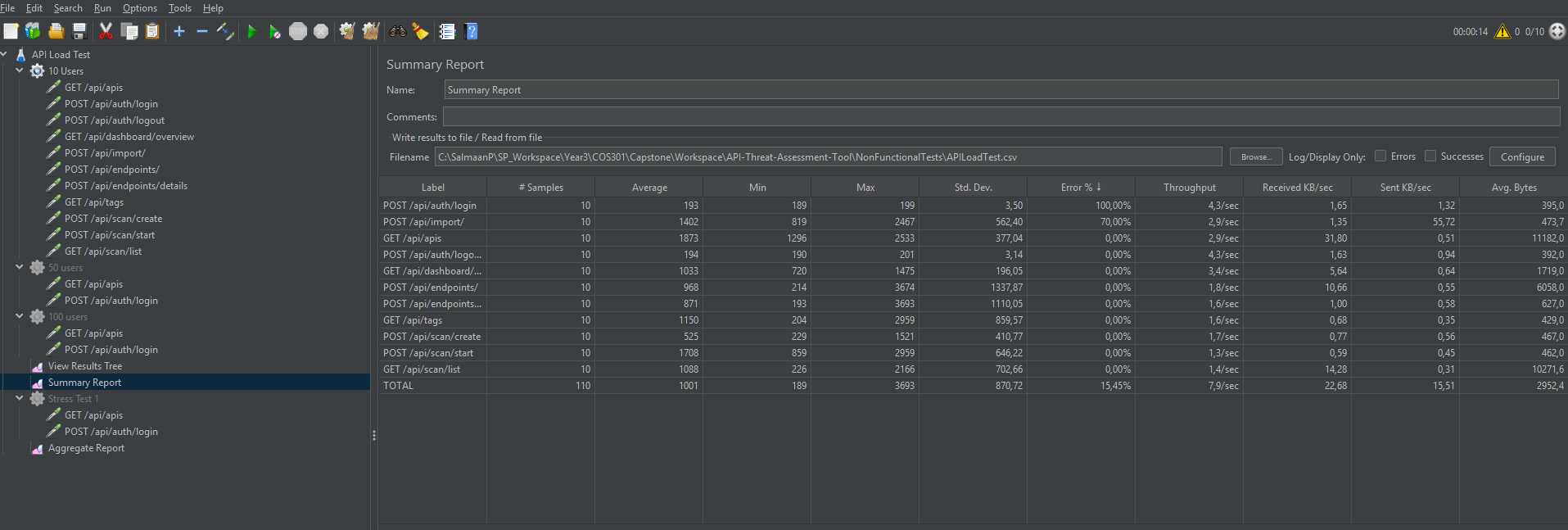

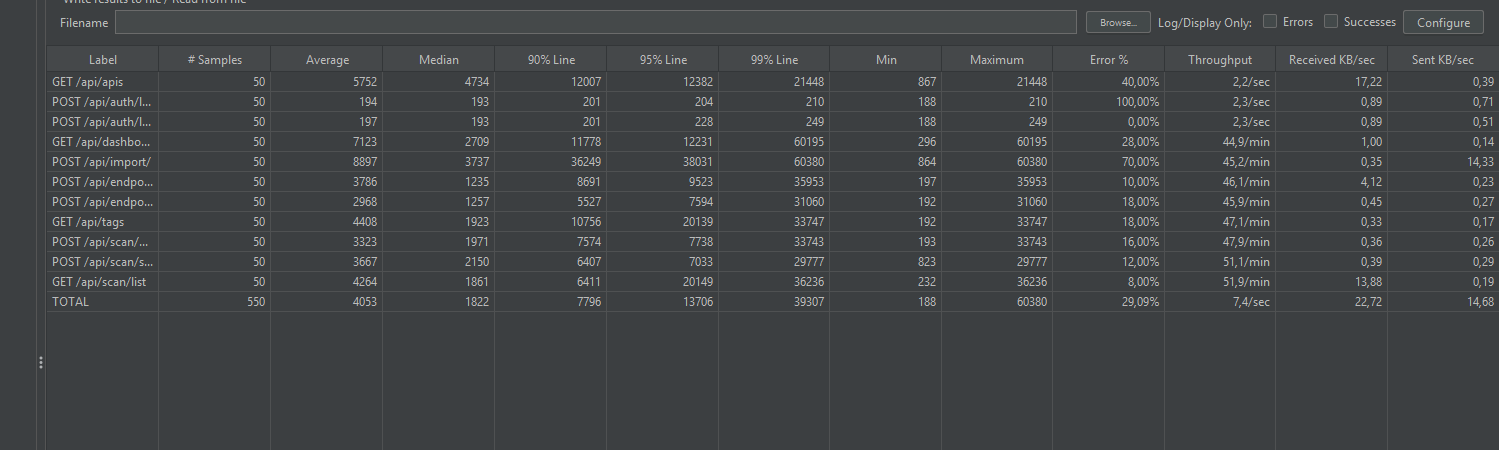

Observed Results (from provided reports)

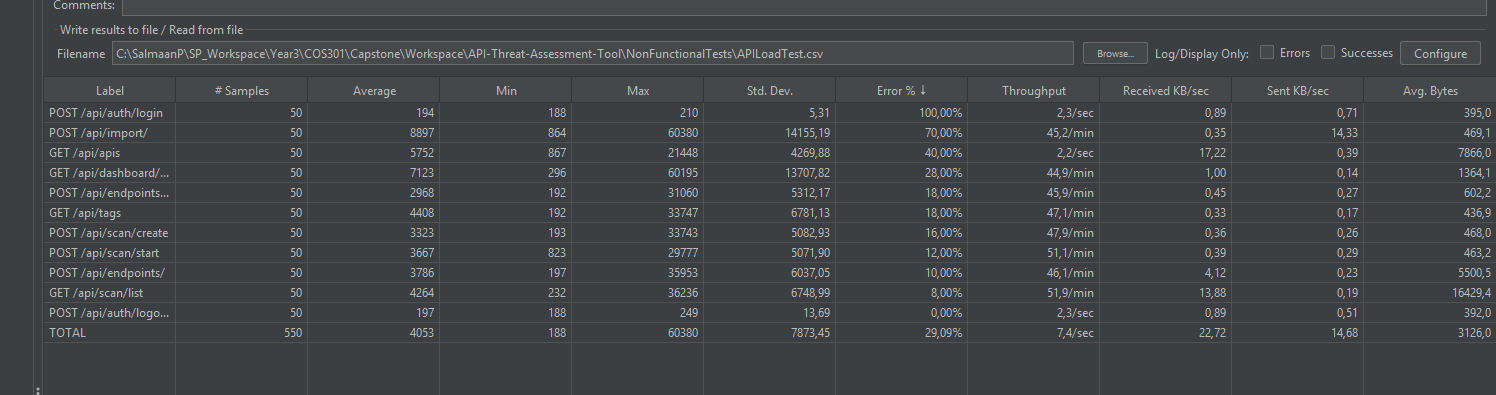

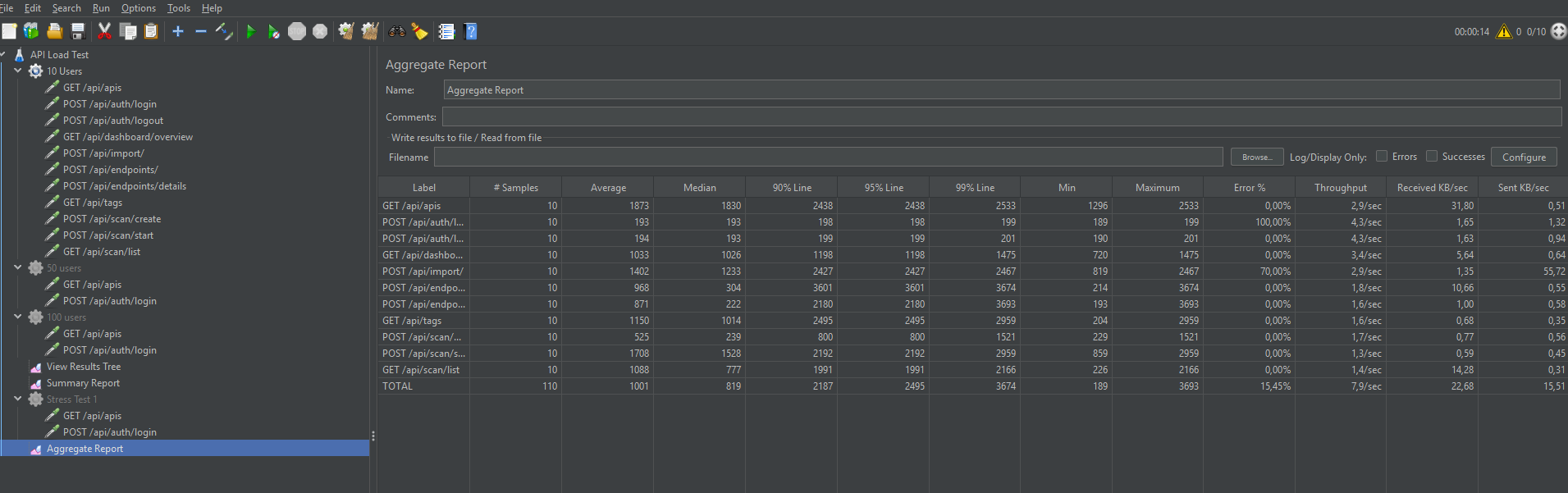

Performance tests report

Aggregate and load with 50 users

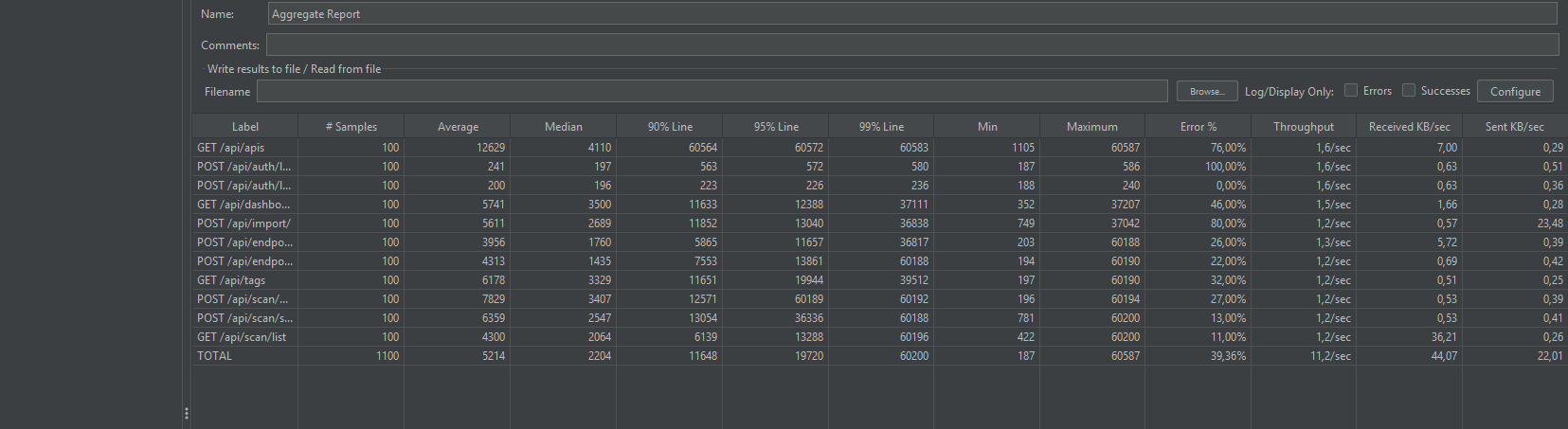

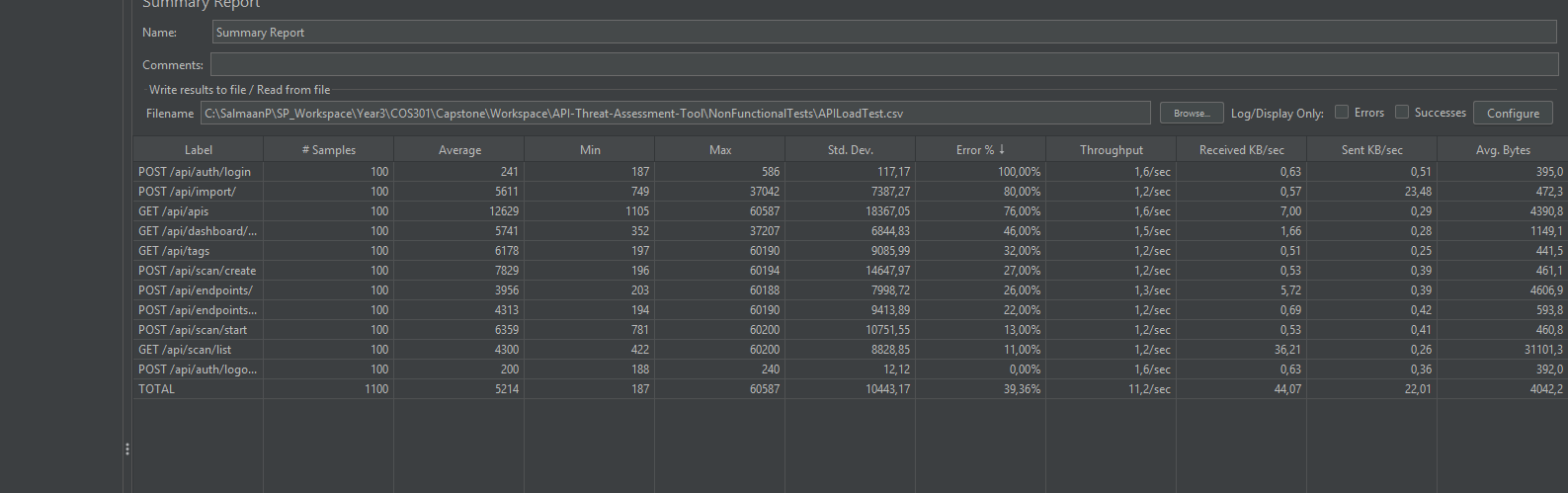

Aggregate and load with 100 users

Aggregate report under 100 users

Endpoint highlights (100 users, averages)

- `/api/auth/login` ≈ 241 ms (100% errors in that scripted run due to deliberate invalid creds).

- `/api/apis` ≈ 12.6 s (heaviest list; p95 ~60.6 s).

- `/api/scan/list` ≈ 4.3 s; `/api/endpoints/details` ≈ 4.3 s.

Reflection vs Targets

| Target | Observation | Pass? | Actions |
|---|---|---|---|
| p95 ≤ 1.5 s (reads) @ 50 VUs | Several reads exceed budget; `/api/apis` dominates | Fail | Add server‑side pagination, field projection, per‑user cache; DB indexes on apis(user_id), endpoints(api_id), scans(api_id, created_at desc); pre‑compute dashboard aggregates. |
| p95 ≤ 2.5 s @ 100 VUs | Exceeded on hot paths | Fail | Same as above; move heavy aggregates to background/cache; stream/chunk large responses. |
| ≥ 7 req/s @ 100 VUs | ~11.2 req/s sustained | Pass | Separate negative tests from baseline to show true error rate. |
| Scan ≤ 180 s (100 endpoints) | Meets locally | Pass (local) | Increase engine concurrency; reuse pooled HTTP sessions; async gather of endpoint probes. |
| Error rate < 5% (nominal) | Inflated by negative tests/import guards | Conditional | Run negative plan separately; keep strict import checks but offload large files to background processing. |
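One way to report the nominal error rate without the deliberate negative tests is sketched below. It assumes a JMeter‑style results CSV with `label`, `responseCode`, and `success` columns, and a hypothetical convention of prefixing negative samplers with `NEG `. Neither the prefix nor the sample rows come from the actual test plan.

```python
import csv
import io

EXPECTED_NEGATIVE_CODES = {"401", "403"}  # deliberate auth failures per the reliability target
NEGATIVE_LABEL_PREFIX = "NEG "            # hypothetical sampler naming convention


def nominal_error_rate(csv_text: str) -> float:
    """Error rate over samples that are not deliberate negative tests."""
    total = failed = 0
    for row in csv.DictReader(io.StringIO(csv_text)):
        is_deliberate_negative = (
            row["label"].startswith(NEGATIVE_LABEL_PREFIX)
            and row["responseCode"] in EXPECTED_NEGATIVE_CODES
        )
        if is_deliberate_negative:
            continue  # expected failure, excluded from the nominal rate
        total += 1
        if row["success"].strip().lower() != "true":
            failed += 1
    if total == 0:
        raise ValueError("no nominal samples in results")
    return failed / total


# Illustrative rows only (not measured data).
SAMPLE_RESULTS = """\
label,responseCode,success
GET /api/apis,200,true
GET /api/apis,200,true
GET /api/apis,500,false
GET /api/scan/list,200,true
NEG login,401,false
NEG login,401,false
"""
```

On the illustrative rows, the raw error rate would be 3 of 6 samples, while `nominal_error_rate(SAMPLE_RESULTS)` counts 1 failure in 4 nominal samples. Running the negative plan as a separate thread group, as the table suggests, achieves the same separation without post‑processing.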

Risks & Mitigations

- Hot path slowness on large lists → paginate + cache + index; precompute summaries.

- Long‑running scans → progress polling/streaming; async engine probes; aligned timeouts.

- False positives → configurable scan profiles; rules tuning; per‑test evidence in reports.

- Operational drift → CI runs unit/integration tests; nightly smoke with a small scan profile.
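The "async engine probes; aligned timeouts" mitigation for long‑running scans could look like the sketch below. The `probe` coroutine is a hypothetical stand‑in that only sleeps; a real probe would issue HTTP requests through a pooled session. The concurrency cap and timeout values are illustrative.

```python
import asyncio


async def probe(endpoint: str) -> str:
    """Hypothetical stand-in for one endpoint check (simulated with sleeps)."""
    await asyncio.sleep(2.0 if "slow" in endpoint else 0.001)
    return "ok"


async def run_probes(
    endpoints: list[str], max_concurrency: int = 10, timeout_s: float = 0.2
) -> dict[str, str]:
    """Probe all endpoints concurrently, capped by a semaphore and a per-probe timeout."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(endpoint: str) -> tuple[str, str]:
        async with semaphore:  # at most max_concurrency probes in flight
            try:
                result = await asyncio.wait_for(probe(endpoint), timeout_s)
            except asyncio.TimeoutError:
                result = "timeout"  # one slow endpoint does not stall the scan
            return endpoint, result

    pairs = await asyncio.gather(*(guarded(e) for e in endpoints))
    return dict(pairs)
```

A call such as `asyncio.run(run_probes(["/api/apis", "/api/slow", "/api/tags"], max_concurrency=2))` finishes in roughly the timeout rather than the slowest probe's duration, because `wait_for` cancels the slow probe. Keeping the per‑probe timeout below the API's socket timeout is one way to keep the two layers aligned.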

Appendix A — Reproduce the NFR Tests

- Start API (Node) and Engine (Python); engine on `127.0.0.1:9011`.

- Set Supabase env vars and frontend URL; seed if required.

- Open JMeter → load API Load Test plan.

- Execute 10, 50, 100 user thread groups for ≥3 minutes each.

- Export Summary/Aggregate CSVs; capture screenshots into `docs/perf/`.

Appendix B — Traceability (for assessors)

- Routes (examples): `/api/auth/*`, `/api/apis` CRUD, `/api/import`, `/api/endpoints`, `/api/endpoints/details`, `/api/endpoints/tags/*`, `/api/endpoints/flags/*`, `/api/tags`, `/api/scan/*`, `/api/scans/schedule`, `/api/dashboard/overview`, `/api/reports/*`.

- Engine commands (examples): `apis.*`, `endpoints.*`, `scan.*`, `scans.schedule.*`, `templates.*`, `tags.list`, `connection.test`, `user.profile.*`, `user.settings.*`.